February 19, 2025

The Ultimate Guide to Quality Assurance Automation: From Manual Testing to AI-Driven Excellence

The Evolution of Quality Assurance Automation

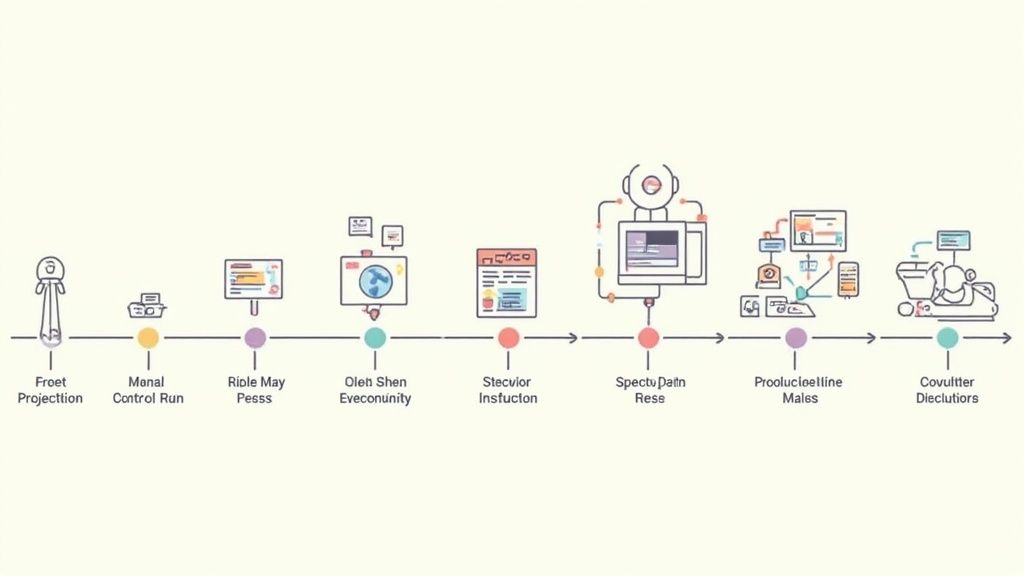

Quality assurance (QA) automation has dramatically changed over the last few decades. Initially, many saw QA as a manual process, primarily focused on checking off requirements. However, the increasing complexity of modern software demanded a more effective approach. Automation has become essential, reshaping testing practices across various industries.

From Manual To Automated Systems

In the latter part of the 20th century, automation started playing a key role in QA. The 1990s brought a significant shift with the introduction of tools like WinRunner and QuickTest Professional. These early tools automated functional and regression tests, offering faster and more accurate defect detection in complex systems. This automation was vital in reducing the time and resources needed for QA, allowing companies to keep pace with software development demands.

As technology progressed, QA automation expanded its scope. Automated testing now covers a wider range of processes:

- Functional Testing: Automating the validation of application features.

- Regression Testing: Ensuring new code doesn't negatively impact existing functionality.

- Performance Testing: Measuring system performance through automated load and stress tests.

Key Innovations and Strategic Thinking

The shift to automated systems didn't replace strategic thinking. Successful teams found that combining automation with human oversight is what lets them manage increasingly complex systems. Today’s QA processes blend AI-powered tools with human expertise to ensure comprehensive testing and nuanced analysis.

Advances in AI have brought sophisticated analytics and predictive capabilities to QA. These technologies improve automation by:

- Identifying trends and patterns not easily seen through manual testing.

- Providing real-time feedback and immediate insights into system performance.

- Enabling autonomous testing that dynamically adapts to changing requirements.

Practical Transformations and Lessons Learned

Many companies have successfully transitioned to QA automation, resulting in greater testing efficiency. For example, one leading firm implemented AI-driven tools and drastically reduced regression testing time while maintaining accuracy. Another organization saw a 30% reduction in manual effort, allowing their QA teams to focus on strategic improvements.

However, automation adoption has its challenges. Teams often encounter obstacles like choosing the right tools, training staff, and integrating with existing systems. Overcoming these challenges requires:

- Comprehensive training for team members.

- Selecting appropriate tools aligned with current and future needs.

- Gradual implementation of automation for smooth adaptation.

These real-world examples highlight the importance of strategic planning and continuous learning for successful QA automation. As companies continue to innovate, the evolution of quality assurance promises even greater improvements in system efficiency and reliability.

Building a Strong Foundation: Quality Standards and Frameworks

A successful quality assurance automation program needs more than just automated tests – it requires a structured approach built on proven standards and frameworks. Organizations need to select and adapt these frameworks thoughtfully to achieve reliable testing that supports their specific needs.

Choosing the Right Framework

Selecting an appropriate framework is essential when starting quality assurance automation. Here are some common options:

- Linear Framework (Waterfall): A step-by-step approach where each phase must complete before moving forward. Works well for straightforward projects with clear requirements.

- Iterative Framework (Agile): Emphasizes adaptability and team collaboration, allowing changes throughout development. Best suited for complex projects with evolving needs.

- V-Model: Builds on the waterfall model by closely connecting testing and development phases. Ideal when thorough verification is critical.

The best choice depends on your specific situation – a startup might benefit from agile's flexibility, while a large enterprise developing critical systems may need the structure of the V-Model.

Implementing Your Chosen Framework

Once you select a framework, proper implementation requires:

- Clear role definition: Each team member must understand their QA responsibilities

- Strong communication: Regular and open dialogue keeps testing on track

- Appropriate tools: Select and integrate tools that enhance efficiency

- Strategic test planning: Define test cases, environments, and reporting methods

This organized approach helps quality assurance become a natural part of development.

Adapting Frameworks for Modern Needs

Quality assurance frameworks must evolve alongside software development practices. Many organizations now use continuous integration and delivery (CI/CD), requiring frameworks that work with rapid releases. Teams are merging agile approaches with DevOps practices to improve collaboration between development, operations and QA.

The rise of cloud and mobile technology has created new testing challenges that frameworks must address. The quality assurance field has come a long way since the 1980s, when formal processes gained prominence. Key milestones include the first ISO 9000 quality management standards, published in 1987, and the Capability Maturity Model (CMM), which Carnegie Mellon's Software Engineering Institute began developing in the late 1980s.

Measuring Framework Effectiveness

Regular measurement helps improve quality assurance processes. Key metrics include:

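- Defect detection rate: Share of all known defects that testing catches before release

- Test coverage: Percentage of code exercised by automated tests

- Time to resolution: Average time from a bug being reported to it being fixed

- Test automation rate: Proportion of test cases that are automated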
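
The four metrics above reduce to simple ratios and averages. The sketch below is one way to compute them, assuming hypothetical per-release counts. It defines defect detection rate as defects caught in testing divided by all defects found (in testing plus production), a common convention, and treats coverage as the line coverage reported by whatever coverage tool a team already uses.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class QAMetrics:
    """Hypothetical raw counts for one release; field names are illustrative."""

    defects_found_in_testing: int
    defects_found_in_production: int
    lines_covered: int
    lines_total: int
    automated_tests: int
    total_tests: int

    def defect_detection_rate(self) -> float:
        """Percent of all known defects that testing caught before release."""
        total = self.defects_found_in_testing + self.defects_found_in_production
        return 100.0 * self.defects_found_in_testing / total if total else 0.0

    def test_coverage(self) -> float:
        """Percent of lines executed by the test suite."""
        return 100.0 * self.lines_covered / self.lines_total if self.lines_total else 0.0

    def automation_rate(self) -> float:
        """Percent of test cases that run without manual steps."""
        return 100.0 * self.automated_tests / self.total_tests if self.total_tests else 0.0


def mean_time_to_resolution(bugs: list[tuple[datetime, datetime]]) -> timedelta:
    """Average time from report to fix, given (reported_at, fixed_at) pairs."""
    if not bugs:
        return timedelta(0)
    total = sum((fixed - reported for reported, fixed in bugs), timedelta(0))
    return total / len(bugs)


if __name__ == "__main__":
    m = QAMetrics(
        defects_found_in_testing=45, defects_found_in_production=5,
        lines_covered=8_200, lines_total=10_000,
        automated_tests=300, total_tests=400,
    )
    print(m.defect_detection_rate(), m.test_coverage(), m.automation_rate())  # 90.0 82.0 75.0
```

Tracking the same calculation release over release matters more than any single value, since the trend shows whether process changes are working.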

Tracking these metrics helps teams optimize their processes and ensure quality standards are met. This data-driven approach keeps quality assurance aligned with business goals.

Emerging Trends in QA Automation

Quality assurance automation keeps evolving as new technologies emerge. Teams need to understand which new tools and approaches can add real value to their testing processes. The key is identifying practical applications rather than chasing every new trend.

The Rise of AI and Machine Learning

Artificial intelligence (AI) and machine learning (ML) are reshaping quality assurance automation. These technologies excel at finding patterns in test data and predicting potential issues. Teams use AI to generate targeted test cases, catch defects early, and improve the testing experience.

AI helps teams understand how users interact with applications and identify risky areas that need more testing focus. When code changes occur, AI can point out which parts of the application might break. Recent data shows 72.3% of QA teams are now using or exploring AI-based testing tools. Many teams are adopting AI-powered end-to-end testing platforms that combine multiple testing types – from performance to accessibility – in one system.
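
The idea of flagging which tests a code change might break can be illustrated without AI. The sketch below is a plain, rule-based form of test impact analysis, not a machine-learning model. It assumes a hypothetical `coverage_map` from each test to the source files it executes (in practice this might be derived from per-test coverage data) and selects only the tests that touch a changed file.

```python
def select_impacted_tests(
    changed_files: set[str],
    coverage_map: dict[str, set[str]],
) -> list[str]:
    """Return, sorted by name, the tests whose covered files overlap the changed files.

    coverage_map maps a test name to the set of source files it executes.
    """
    return sorted(test for test, files in coverage_map.items() if files & changed_files)


# Hypothetical mapping; real data would come from per-test coverage runs.
coverage_map = {
    "test_login": {"auth/session.py", "ui/login_page.py"},
    "test_checkout": {"cart/pricing.py", "payments/gateway.py"},
    "test_profile_update": {"auth/session.py", "profile/service.py"},
}

print(select_impacted_tests({"auth/session.py"}, coverage_map))
# ['test_login', 'test_profile_update']
```

A real setup would usually also run any test that has no coverage data yet, so newly added tests are not silently skipped.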

Advanced Analytics and Predictive Capabilities

Advanced analytics gives teams deeper insights into test results. Instead of just seeing what failed, teams can understand the root causes of issues. This leads to faster and more focused problem-solving.

Machine learning predictions help teams spot potential problems before they impact users. By studying past test data and current patterns, ML tools can highlight areas that need attention. This shift from finding bugs to preventing them makes a big difference in software quality.
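
As a deliberately simple stand-in for the predictive tools described above, the sketch below ranks modules by a recency-weighted failure rate computed from past test results. It is a heuristic, not a trained ML model, and the `(module, passed)` history format and the default `decay` of 0.8 are assumptions chosen for illustration.

```python
from collections import defaultdict


def risk_scores(history: list[tuple[str, bool]], decay: float = 0.8) -> dict[str, float]:
    """Score each module by a recency-weighted failure rate between 0 and 1.

    history is an ordered list of (module, passed) results, oldest first.
    Each step back in time multiplies a result's weight by `decay`,
    so recent failures count more than old ones.
    """
    weighted_failures: dict[str, float] = defaultdict(float)
    weighted_runs: dict[str, float] = defaultdict(float)
    n = len(history)
    for i, (module, passed) in enumerate(history):
        weight = decay ** (n - 1 - i)  # the newest result gets weight 1.0
        weighted_runs[module] += weight
        if not passed:
            weighted_failures[module] += weight
    return {m: weighted_failures[m] / weighted_runs[m] for m in weighted_runs}


if __name__ == "__main__":
    # Hypothetical run history, oldest first.
    history = [("payments", False), ("search", True), ("payments", True),
               ("search", True), ("payments", False)]
    scores = risk_scores(history)
    for module in sorted(scores, key=scores.get, reverse=True):
        print(f"{module}: {scores[module]:.2f}")
```

Lower `decay` values make the score react faster to recent failures; a value of 1.0 turns it into a plain historical failure rate.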

Emerging Tools and Technologies

New QA automation tools keep appearing to meet different testing needs:

- Codeless Automation Tools: Make test automation accessible to team members who don't code

- Self-Healing Test Automation: Tests that fix themselves when small UI or code changes happen

- Cloud-Based Testing Platforms: Allow testing across many devices and setups without managing hardware
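
Self-healing tools vary in how they recover, but the core idea can be sketched as an ordered list of fallback locators: if the primary locator stops matching after a UI change, the test tries the alternates and records which one worked so someone can update the script. Everything here is hypothetical: `find` stands in for a real driver lookup that returns `None` on no match, and the dictionary plays the role of a page. Many commercial tools use richer signals, such as similarity between element attributes, rather than a fixed fallback list.

```python
from typing import Callable, Optional

Locator = tuple[str, str]  # (strategy, value), e.g. ("id", "submit")


class ElementNotFound(Exception):
    pass


def find_with_healing(
    find: Callable[[Locator], Optional[object]],
    locators: list[Locator],
    log: list[str],
) -> object:
    """Try each locator in order and return the first element found.

    When a fallback (anything after the first locator) succeeds, a note is
    appended to `log` so a maintainer can update the primary locator later.
    """
    for position, locator in enumerate(locators):
        element = find(locator)
        if element is not None:
            if position > 0:
                log.append(f"healed: {locators[0]} -> {locator}")
            return element
    raise ElementNotFound(f"no locator matched: {locators}")


# Hypothetical page after a redesign renamed the button's id.
page = {("id", "submit-btn"): "<button>"}
log: list[str] = []
button = find_with_healing(page.get, [("id", "submit"), ("id", "submit-btn")], log)
print(button, log)
```

Logging the healed locator, rather than quietly continuing, keeps the fallback from hiding a real regression.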

Preparing for the Future of Automated Testing

Success in QA automation requires staying current with changes. Here's what QA teams should focus on:

- Keep learning: Attend conferences, join workshops, and use online resources to build new skills

- Work across teams: Build knowledge in development and operations to improve team collaboration

- Put users first: Focus on testing the full user experience, not just individual features

Teams that adopt helpful new tools and methods while keeping focus on quality and efficiency will deliver better software. The key is choosing technologies that solve real problems and fit well with existing processes.

Implementing Effective QA Automation Strategies

Creating effective quality assurance automation requires more than just converting manual tests into automated ones. Success depends on choosing appropriate tools, creating solid test cases, and smoothly incorporating automation into development. Let's explore how QA teams can build strategies that get real results.

Selecting and Implementing the Right Tools

Picking the right automation tools is essential for QA success. Since different tools serve different purposes, match them to your specific project needs. Consider these key factors:

- Programming Language Support: Pick tools that work with your project's programming languages

- Platform Compatibility: Ensure tools work across your testing environments (web, mobile, desktop)

- Integration Options: Choose tools that connect well with your existing systems. For example, tools that fit into CI/CD pipelines enable testing with each code update

- Reporting Features: Look for tools that provide clear test results and highlight areas to improve

When implementing new tools:

- Train Your Team: Make sure everyone knows how to use the tools properly

- Start Small: Begin with pilot projects to gain experience before wider rollout

- Phase In Gradually: Add automation step-by-step to minimize workflow disruption

Designing Robust Test Cases

The success of QA automation heavily depends on well-built test cases. Aim for tests that are:

- Easy to Maintain: Simple to update as software changes to avoid expensive rework

- Reusable: Built to work across different test scenarios

- Data-Driven: Keep test data separate from test logic for more flexibility

Also focus on:

- Setting Priorities: Start with the most important tests that will have the biggest impact

- Assessing Risks: Create tests that address key vulnerabilities

- Managing Test Data: Have a clear plan for creating, storing and accessing test data

Integrating Automation Into the Development Lifecycle

QA automation works best when it's woven throughout development rather than tacked on at the end. Key practices include:

- Early Testing: Test from the start to catch issues sooner when they're cheaper to fix

- Continuous Integration: Add automated tests to CI/CD pipelines to check each code update

- Team Communication: Build strong connections between developers, testers and operations for faster feedback

Establishing Meaningful Metrics

Measuring automation success shows its real value. Track metrics like:

- Test Coverage: What percent of code gets tested? Higher coverage means more thorough testing

- Bug Detection: How many issues do automated tests find? A rising count can indicate more effective tests, but it can also signal declining code quality, so read it alongside other metrics

- Test Runtime: How long tests take to complete. Faster runs enable more frequent testing

These measurements help teams improve their automation approach and show stakeholders the benefits. With 93% of support teams reporting higher customer demands, strong QA automation is vital for success.

This systematic approach to QA automation leads to more efficient testing, faster feedback, and better quality software. It helps organizations adapt to changing needs while delivering products that truly work for their customers.

Building and Managing Automated Test Suites

Quality assurance automation requires well-designed test suites that deliver reliable results. Building these suites takes careful planning and solid practices to ensure they remain useful over time.

Structuring Your Test Architecture

A clear test structure forms the base of any maintainable test suite. Most QA teams organize tests by functional areas, keeping related tests together for easier updates. This modular approach helps teams quickly find and modify tests as software changes.

Teams often separate tests by type as well. Keeping unit tests, integration tests, and end-to-end tests in distinct groups allows focused testing during different development stages.

Effective Test Data Management

Good test data is essential for reliable automation. Many teams use central data repositories as a single source of truth, making it simpler to keep test data current and consistent.

Data-driven testing runs the same test with multiple data inputs, improving coverage without duplicating code. This works especially well for testing varied scenarios, like different types of customer interactions in call centers.
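
A minimal data-driven sketch with Python's standard `unittest` module: the scenarios live in a plain data table, separate from the single test method that loops over them, and `subTest` reports each failing row on its own. The `route_call` function and its routing rules are hypothetical, chosen to echo the call-center example; in a real suite the table would more likely be loaded from a CSV or JSON file or a central data repository.

```python
import unittest


def route_call(reason: str, is_vip: bool) -> str:
    """Hypothetical system under test: picks a queue for an inbound call."""
    if is_vip:
        return "priority"
    return {"billing": "billing", "outage": "technical"}.get(reason, "general")


# Test data kept apart from test logic; could equally be loaded from CSV/JSON.
ROUTING_CASES = [
    {"reason": "billing", "is_vip": False, "expected": "billing"},
    {"reason": "outage", "is_vip": False, "expected": "technical"},
    {"reason": "outage", "is_vip": True, "expected": "priority"},
    {"reason": "other", "is_vip": False, "expected": "general"},
]


class RoutingTest(unittest.TestCase):
    def test_routing_table(self) -> None:
        for case in ROUTING_CASES:
            # subTest reports each failing row separately instead of stopping at the first.
            with self.subTest(**case):
                self.assertEqual(route_call(case["reason"], case["is_vip"]), case["expected"])
```

Saved as `test_routing.py`, it can be run with `python -m unittest test_routing`; adding a scenario means adding a row, not a new test method.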

Maintaining Code Quality Within Automation Frameworks

Test code needs the same care as application code. Regular code reviews, version control, and coding standards keep test suites healthy. Poor test code leads to unreliable results and slows development. A well-maintained suite supports fast, accurate testing.

Designing Adaptable and Informative Tests

Good tests provide clear feedback when issues occur. Like a detailed medical diagnosis that speeds up treatment, precise test results help teams fix problems faster.

Tests must also keep up with software changes. Methods like the page object model (POM) and keyword-driven testing make tests more reusable and easier to update as applications evolve.
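
A page object gathers one screen's locators and actions into a class, so a UI change means editing that class rather than every test that uses the screen. The sketch below assumes a driver exposing `find_element(by, value)`, which matches the shape of Selenium's WebDriver method (Selenium's `By.ID` and `By.CSS_SELECTOR` constants are the strings `"id"` and `"css selector"`). To stay self-contained it runs against a hypothetical in-memory `FakeDriver`; `LoginPage` and its locators are illustrative, not taken from any real application.

```python
from typing import Callable, Optional, Protocol


class Element(Protocol):
    text: str

    def send_keys(self, value: str) -> None: ...

    def click(self) -> None: ...


class Driver(Protocol):
    def find_element(self, by: str, value: str) -> Element: ...


class LoginPage:
    """Page object: the only place that knows the login screen's locators."""

    USERNAME = ("id", "username")
    PASSWORD = ("id", "password")
    SUBMIT = ("css selector", "button[type=submit]")
    BANNER = ("id", "welcome")

    def __init__(self, driver: Driver) -> None:
        self.driver = driver

    def log_in(self, user: str, password: str) -> str:
        """Fill in the form, submit it and return the welcome banner text."""
        self.driver.find_element(*self.USERNAME).send_keys(user)
        self.driver.find_element(*self.PASSWORD).send_keys(password)
        self.driver.find_element(*self.SUBMIT).click()
        return self.driver.find_element(*self.BANNER).text


# --- Hypothetical fake driver so the example runs without a browser ---
class FakeElement:
    def __init__(self, on_click: Optional[Callable[[], None]] = None) -> None:
        self.text = ""
        self.value = ""
        self._on_click = on_click

    def send_keys(self, value: str) -> None:
        self.value += value

    def click(self) -> None:
        if self._on_click:
            self._on_click()


class FakeDriver:
    def __init__(self) -> None:
        self.elements: dict[tuple[str, str], FakeElement] = {
            ("id", "username"): FakeElement(),
            ("id", "password"): FakeElement(),
            ("id", "welcome"): FakeElement(),
            ("css selector", "button[type=submit]"): FakeElement(self._submit),
        }

    def _submit(self) -> None:
        user = self.elements[("id", "username")].value
        self.elements[("id", "welcome")].text = f"Welcome, {user}"

    def find_element(self, by: str, value: str) -> FakeElement:
        return self.elements[(by, value)]


print(LoginPage(FakeDriver()).log_in("alice", "s3cret"))  # Welcome, alice
```

Tests then call `LoginPage(driver).log_in(...)` and never mention locators, so a renamed field is a one-line change in `LoginPage`.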

Strategies for Long-Term Test Suite Health

Keeping test suites effective requires ongoing attention. Regular reviews help remove outdated tests, similar to pruning a garden. Teams should also refactor test code to improve clarity and maintainability. This active approach ensures automated tests remain valuable throughout development. Following these guidelines helps QA teams build robust test suites that provide accurate results and support quality software delivery.

Measuring Success and ROI in QA Automation

Evaluating the business impact of quality assurance automation requires looking at both direct and indirect benefits. Companies need clear ways to measure value and justify ongoing investment in automation tools and processes.

Quantifying Time Savings and Quality Improvements

One key metric is time savings through automated testing. When regression tests run automatically overnight, QA teams can focus on complex testing during work hours. Research shows that automated functional testing typically reduces overall testing time by 25-30%.

Another crucial measurement is quality improvement. Automated tests provide reliable, repeatable results while minimizing human error. Finding defects early matters because fixes generally become much more costly the later in development a defect is discovered.

Calculating ROI for Stakeholder Engagement

A basic formula for calculating automation ROI is:

\[
\text{ROI} = \left( \frac{\text{Benefits of Automation} - \text{Cost of Automation}}{\text{Cost of Automation}} \right) \times 100
\]

Count both direct benefits like reduced costs and faster releases, as well as indirect gains such as higher customer satisfaction. Breaking down the components helps build a stronger business case.
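
A worked example of the ROI formula, with every figure hypothetical: the hourly rate of 50, the hours saved per release, the licence cost and the estimated value of avoided production defects are placeholders to replace with a team's own data. Indirect gains such as customer satisfaction are hard to price and are left out of this sketch.

```python
def automation_roi(benefits: float, costs: float) -> float:
    """ROI in percent: ((benefits - costs) / costs) * 100."""
    if costs <= 0:
        raise ValueError("costs must be positive")
    return (benefits - costs) / costs * 100


# Hypothetical one-year figures for a single team.
HOURLY_RATE = 50
costs = (
    15_000                    # tool licences
    + 400 * HOURLY_RATE       # building the initial suite
    + 100 * HOURLY_RATE       # maintaining scripts over the year
)  # 40_000
benefits = (
    80 * 12 * HOURLY_RATE     # manual regression hours saved over 12 releases
    + 12_000                  # estimated cost of production defects avoided
)  # 60_000

print(f"ROI: {automation_roi(benefits, costs):.1f}%")  # ROI: 50.0%
```

Listing each cost and benefit on its own line makes the assumptions easy for stakeholders to challenge and adjust.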

Frameworks for Tracking Benefits

While measurable metrics are important, don't overlook intangible benefits like improved team morale from reduced manual work. A complete evaluation framework should include:

- Tangible Metrics: Time saved, defect detection rates, test coverage, fewer production issues

- Intangible Metrics: Team satisfaction, better collaboration, customer feedback

Using tables helps communicate the full range of benefits:

| Metric | Tangible Benefits | Intangible Benefits |
|---|---|---|
| Time Savings | Faster release cycles | Reduced burnout |
| Defect Discovery | Higher defect capture rates | Confidence in product stability |
| Test Coverage | Greater percentage of application tested | Team satisfaction from higher trust |

Data-Driven Decisions and Strategies

Looking at testing metrics helps teams spot areas needing more attention. Real-time automation dashboards give quick insights for making informed choices about test coverage and resource allocation.

Success stories from early automation efforts make compelling cases for expanding automation programs. Show concrete results to gain support for growing your automation initiatives.

For call center managers looking to enhance client interaction quality, Call Criteria offers an ideal solution. Transform your customer service operations by integrating their advanced AI and quality assurance insights. Take the step to optimize your business outcomes today!